Navigating the (Crypto) Information Maze

First, this post covers the macro factors that make navigating the modern information landscape a tough task. Second, it covers the additional factors that, combined with an already challenging landscape, make information management in crypto doubly tough. Third, it outlines the tools and strategies I’ve used to navigate the information maze effectively. I hope these strategies will be valuable, or at least start a dialogue or curation meme that others can thread their strategies to.

The post uses examples from crypto, but I hope it will be valuable for most other fields.

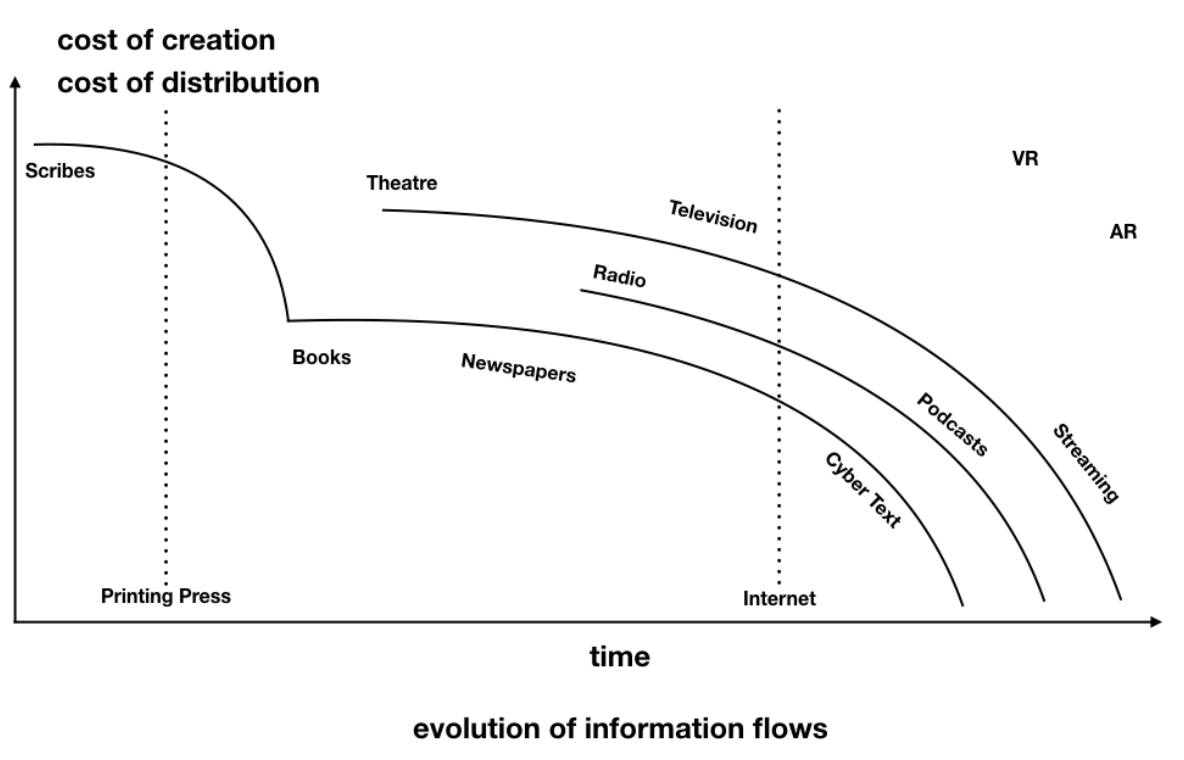

The chart above highlights how the internet has rapidly accelerated information flows while exponentially reducing the cost of producing and distributing information. For simplicity, the costs of creation and distribution are aggregated into one curve. Below I’ve expanded on how these exponentials affect information flows.

Another related factor of note: we will soon face the threat of algorithmically created information, which will further saturate the information landscape. Emerging technologies like VR/AR are still in their infancy, with high costs of creation and distribution and a high adoption cost for the user. VR/AR will eventually undergo a similar exponential decrease in cost, adding to the saturated landscape. Additionally, the immersion of these mediums will be unparalleled, making them very engaging and hard to resist.

Exponentials of Information

Cost of production is approaching 0

To create a piece of information that can reach millions, one only needs a $100 smartphone and an internet connection. Previously, people needed access to a printing press.

Nick Tomaino on Crypto Information Asymmetries

Marginal cost of distribution is approaching 0

The internet enables information creators to distribute a piece of information to numerous people with no additional effort. This is different from consumption, where several nuances are involved, including chance and algorithmic network effects. Side note: because information creation (media or code) can reach thousands, we’re in an age of infinite leverage. It’s like an army of robots that work for you while you sleep.

Cost of amplification is reaching infinity

Algorithmic feeds aggregate and amplify information at a massive scale. Manipulation of these algorithmic systems can produce infinitely costly results, e.g. Russia meddling in the US elections. Additionally, as the outlook of ad-driven algorithms is short-term minded (increase capitalist profits), we sometimes get infinitely costly long-term results.

Erik Torenberg on the Information Deluge

These three exponentials of production, distribution and amplification offer infinite leverage to the individual creator, but they also result in a high % of low quality and biased information reaching you. Couple this with the fact that information intake is effortless due to hardware innovation (Kindles, smartphones, AirPods) and software innovation (text to speech), and you have the potential for mass manipulation and echo chambers.

Furthermore, information in crypto is fragmented across many different mediums due to:

The plethora of modern communication tools available

Perceived security benefits of secure platforms, e.g. Signal, Keybase

Geographical popularity and geographical censorship, e.g. WeChat

Ideological support and dogfooding of decentralized products, e.g. Matrix

Due to the distributed and remote nature of crypto communities, people communicate along a wide spectrum of synchronicities, from instantaneous chat and video calls to asynchronous forums. This fragmentation of platforms and varying synchronicities further adds to the information management demands on a crypto builder.

Filtering Processes

Once you’ve decided on your area of interest, you can start a systematic search for high quality information sources. In general, you’re likely to find consistently high quality sources in this order: Twitter → Newsletters → Forums → Chat groups. Here are some tips I’ve found helpful for identifying high signal sources.

When judging an individual information creator, I first judge their original information output (e.g. composed tweets, memes and posts) and second their curational ability. How lasting, unique, differentiated and cohesive is the original information? Wise critiques and contrarian views are something I give more weight to. And how strong is their curational ability in selecting underrated posts?

Additionally, it’s important to judge the quality of each piece of information critically. Even trusted information creators are prone to errors in times of emotional stress, intoxication etc. This fits in with a concept I like to call modular agreement: you don’t have to consume or agree with all the information from a trusted information creator.

As information creators gain followers they often succumb to greed to gain more followers or maintain current standing. This greed causes them to deviate from their originally insightful tweets and resort to tribal fortune cookie sounding aphorisms. It’s important to quickly realize this transition and discount these information creators accordingly.

Revenue Sources

Try to ascertain the revenue sources of each publication or source. As publications get larger and acquire greater reach, the chance of interference from political interests and wealthy benefactors increases. Due to the opacity of holding structures, the bias is not always visible. Another model to be careful of is the ad supported publication. Most of these publications fall prey to trying to increase engagement, even when it is harmful.

Layers of Abstraction

As publications get larger or more algorithmically driven, the many layers of curation and editing get increasingly complex. This abstraction makes it harder to evaluate the authenticity or intentions of the information. With human curated publications like big newspapers or magazines, there are many layers of human curation and directives guiding the final output. In algorithmically curated platforms, there is a chain of human directives plus the sometimes black-box nature of machine learning algorithms.

Permissionlessness / Configurability of Distribution

Sources such as cable news, newspapers and magazines have traditionally been much harder to contribute to and to refactor or distribute into different formats. This is changing with OCR, smartphone photos and video editing software. Writing and distributing the contents of a book, by contrast, is permissionless. This permissionlessness and configurability of distribution is a double-edged sword: it increases the volume of information but, importantly, shifts the burden of curation onto the consumer. In publications where the burden of curation is outsourced to the publication, the chance that inauthentic information is sandwiched among higher quality pieces is high. Also, once a brand is elevated in a consumer’s mind, lower quality authors or changing interests under that umbrella may lead to adverse changes in the quality of information.

Spectrum of Synchronicities

Native internet information exists on a spectrum of synchronicity, from asynchronous (forums) to synchronous (Periscope streams, webinars, live chats), and varies in richness from text to video and soon AR and VR.

Generally, curation and mindful consumption are easier when you are able to consume the information asynchronously. This delay allows you to gather further signals about the piece of information and lets you be in the right frame of mind to consume it.

“In the long-run (and often in the short-run), your willpower will never beat your environment.” - James Clear

One must create strong filters and processes to build an environment that enables seamless information management without having to resort to willpower frequently.

Depending on the breadth of the information spectrum you desire, you will have to assess and outsource trust to high quality individuals outside your area of expertise. All of us do this informally, but one needs to systematize it and stay consistent.

Platform Tips

For Twitter

High % of original tweets

Including an original caption or take when sharing a link

Retweeting non-viral tweets, which signals depth of curation ability

Lack of hot takes on trending news

Insightful comments on other tweets

Original, thoughtful profile picture and bio text
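The first signal in this list can be made roughly measurable. Below is a minimal sketch, assuming you have hand-labelled a sample of an account's recent tweets; the `kind` field and its values are hypothetical labels for illustration, not anything returned by Twitter.

```python
def original_share(tweets):
    """Return the fraction of tweets labelled 'original' (not retweets)."""
    if not tweets:
        return 0.0
    originals = sum(1 for tweet in tweets if tweet["kind"] == "original")
    return originals / len(tweets)


# Hypothetical hand-labelled sample of one account's recent tweets.
sample = [
    {"kind": "original"},
    {"kind": "retweet"},
    {"kind": "original"},
    {"kind": "retweet"},
]
print(f"{original_share(sample):.0%} original tweets")
```

What counts as a "high" share is a judgment call that will vary by niche, so treat the number as one input alongside the other signals above.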

For Newsletters

High % of original writing before the links section, e.g. Proof of Work

Original take or commentary on links shared, e.g. Token Economy

Strong or orthogonal curation of links which are not just the most popular posts of the week, e.g. Week in Ethereum

Do let me know of any tools or processes you use to curate information on Twitter: @divraj. Sharing these insights in public will help improve our meta curational engine ever so slightly in aggregate, making crypto and the greater sphere more antifragile.

Adjacent Areas , Inefficient Formats

In adjacent areas, e.g. protocol research for an investor, it may be more efficient to read high quality summaries on Twitter or in newsletters, e.g. zK Capital Research.

Most books tend to be inefficient formats for consumption. To get the critical information from a book of interest before reading it completely, one can follow the process of ‘fractal reading’:

Summaries - from Wikipedia, Blinkist or tweetstorms

Reviews by media

Interviews with the author

Podcasts with the author - in case there are multiple, as is sometimes the case, feel free to skip the additional podcasts.

Goodreads and Amazon reviews

Systems

Use Airtable, Google Sheets or a similar tool to keep a record of all the information you take in, and reflect on each piece. This idea was suggested by Arjun Balaji. Recording forces you to curate your intake more carefully, as it creates a further cognitive barrier and skin in the game. As you rate and analyze each piece in writing, you will further develop your curational ability. Depending on the level of detail you record, you may soon be able to surface analytical insights similar to Stephen Wolfram’s.
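For anyone who prefers a plain file to Airtable or Sheets, the record-and-rate idea can be sketched in a few lines of Python. The column names below are my own assumption for illustration and are not necessarily the format in the repo mentioned later; the 1 to 5 rating scale is also an assumption.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Hypothetical columns for an intake log.
FIELDS = ["date", "source", "title", "rating", "reflection"]


def log_entry(buffer, entry):
    """Append one intake record to a CSV buffer, writing the header first if empty."""
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    if buffer.tell() == 0:
        writer.writeheader()
    writer.writerow(entry)


def average_rating_by_source(csv_text):
    """Return the mean rating per source so weak sources stand out over time."""
    ratings = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        ratings[row["source"]].append(int(row["rating"]))
    return {source: mean(values) for source, values in ratings.items()}


log = io.StringIO()
log_entry(log, {"date": "2019-01-07", "source": "newsletter-a",
                "title": "Example post", "rating": 4,
                "reflection": "Original take, worth rereading"})
log_entry(log, {"date": "2019-01-08", "source": "chat-group-b",
                "title": "Example thread", "rating": 2,
                "reflection": "Mostly noise"})
print(average_rating_by_source(log.getvalue()))
```

Even this simple per-source average is the kind of analytical insight the record makes possible: sources that consistently rate low are candidates to drop.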

Tools

Tabs - Use tabs to queue pieces of information rather than reading them immediately. This gives you time to reassess whether a piece is worth reading. Curating while reading through shorter form sources like Twitter requires a different cognitive state than reading longer form articles. Queuing up the articles to read in a batch lets you reach the optimal reading state. A further tip: arrange the tabs in order of anticipation, in one direction in the browser. This helps prioritize the most important reads first and often leads to discarding unnecessary reads.

Nuzzel - Combined with Twitter lists, Nuzzel is a powerful tool for curation and a useful signal in your curational engine. You can quickly assess the number of shares and the sentiment people add while sharing the article. Here’s my mega crypto Twitter list, which you can use for Nuzzel: Divraj’s Master Crypto List.

Pocket - Pocket is great for reading offline, such as on planes or nature retreats. It is also a great tool for skipping the many pieces of information you think are useful but really are not. Most borderline pieces are better sent here than queued up in a tab.

Notion - Use Notion to record your rules and processes.

Sheets or Airtable - To implement the curational systems mentioned above. This information curation niche seems like an opportunity for a product. Refind tried something along these lines, and technically Pocket could add this functionality.

Bookmarks (here in Brave) - Create bookmarks to your favorite crypto information sources and communities.

Refining your Curational Network

As with any good neural network, you have to clean and annotate data, seek out unique data and improve the base algorithms of your ‘curational network’ to create unique and consistent outputs.

The Twitter strategy suggested below is based on reading tweets in lists, eliminating Twitter’s algorithmic curation. A lot of insightful tweets tend not to have significant retweets or likes, which would exclude them from being surfaced by Twitter’s algorithm. Most popular tweets tend to be successfully memetically packaged. Reading lists is an opportunity to have an original edge over primarily follower based Twitter users. These users are all likely to see the same amplified tweets from a similar follower set while missing out on many less viral tweets.

I intend to switch to a follower based algorithmic approach and include the results in a follow up post. With Evan Van Ness’s suggestion of blocking liked tweets, it promises to be an interesting experiment. Having a larger web of followers plus an algorithmic filter could likely expose you to only the top quartile of tweets by popularity, versus all the tweets of a few high quality creators.

Here are similar tips from another user

I recommend consuming Twitter in batches, in oldest first order. This gives you an idea of how many tweets await and allows you to adjust your consumption speed based on time constraints. In the brief scan while scrolling, you get an overview and build anticipation for exciting areas. In addition, if your browser crashes or your session is interrupted, you have a bookmark (in hours) of where to resume without missing any tweets.

Twitter Lists

Create 2-3 lists for your primary area of interest to break consumption into batches. The primary list, with the highest quality creators, can be read with priority, and the secondary lists can be skipped if there is no further time in the day. I also keep a ‘min’ list for travel, when time or data is extremely limited.

One can try segregating the lists into niches and observing the results. With the low number of high quality creators, this may result in too much list fragmentation. I’ve also found it more stimulating to have a bit of diversity in the feed.

One can experiment with different sequences of consumption to maximize efficiency and avoid overlap of content sources and niche news. Consuming Nuzzel before Twitter gives you a good broad perspective on the day’s highly shared content. While browsing through Nuzzel, do check people’s takes while sharing the article. Nuzzel displays all the tweets when you expand the selected article. This replicates algorithmically the service Techmeme does so well for tech industry news.

E.g. Sequences to Evaluate

Nuzzel → Twitter → Communities

Twitter → Nuzzel → Communities

Consider using multiple input signals to decide whether to read further into a piece of content. Depending on your niche and the size of your following, you may arrive at different criteria for what signals a piece is worth consuming.

E.g. Conditions

Twitter + Nuzzel required

Original tweet > 3

Retweet1 + Retweet2 + Nuzzel
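These conditions are shorthand, so here is one possible reading of them as code. This is only a sketch: the field names and rule names are hypothetical, and the thresholds reflect my interpretation of the three conditions (seen on both Twitter and Nuzzel; more than 3 original tweets; two retweets plus Nuzzel). Pick or adapt whichever rule suits your niche.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    """Signals observed for one article. All field names are hypothetical."""
    original_tweets: int = 0  # original tweets in your lists sharing it
    retweets: int = 0         # retweets in your lists sharing it
    on_nuzzel: bool = False   # whether it shows up in your Nuzzel feed


# Each rule is one alternative criterion, not a combined test.
RULES = {
    "twitter_and_nuzzel": lambda s: (s.original_tweets + s.retweets) >= 1 and s.on_nuzzel,
    "more_than_3_originals": lambda s: s.original_tweets > 3,
    "two_retweets_and_nuzzel": lambda s: s.retweets >= 2 and s.on_nuzzel,
}


def should_read(signals, rule):
    """Return True if the article passes the chosen rule."""
    return RULES[rule](signals)


article = Signals(original_tweets=1, retweets=0, on_nuzzel=True)
for name in RULES:
    print(name, should_read(article, name))
```

Writing the rule down, even informally, makes it easier to notice when you are clicking through on a single weak signal.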

These recommendations are a result of a small following on twitter. I suspect the strategy for triaging a large following will involve several additional strategies and complexity.

Reacting to Conversations

Building strong IRL filters and tactics is an underrated part of a personal information strategy. Whether in chats or in IRL discussions, conversations can have similarly damaging effects. I’ve heard people mention imagining people on Twitter as real people to avoid toxicity. One should maintain the same adaptability the other way around, imagining real world conversations as tweets, especially at conferences or in new settings. When someone shares clearly detrimental information, reinforce to yourself and to them that such information is not of consequence, and also learn to use it as a reminder to keep your curational guard up.

Additional Tips

Stop watching any television news channel - They are highly biased and focused on boosting engagement through outrage and debasement. Their direct broadcast nature also doesn’t allow for the information market to develop any signal on the quality of information.

Stop reading any content directly from a publication’s home page. Static homepages are a relic of the newspaper age. High quality content is often packaged with paid and low quality content, leading to undesired information intake. Any piece of content should satisfy the conditions outlined in the curational engine above.

Facebook has betrayed people’s trust numerous times and abused data for profit. I would recommend deleting your Facebook, Instagram and WhatsApp accounts. If you have to use Facebook, use the kill newsfeed plugin to remove the newsfeed.

Leave, or disregard any content from, spammy chat groups of family members or friends.

Both Morgan Housel and David Perell have written about the information intake maxim: ‘Will this information I’m reading still be valuable in 3, 5 or 15 years? Only then should I consume it.’

Wrapping Up

In conclusion, I’ve highlighted the factors that make information management in crypto a challenging task. The costs of producing and distributing information are converging to free, resulting in a high % of biased and low quality information. In addition, crypto is harder due to fragmented platforms and largely distributed, remote contributors. I then went over the prevalent structures of information and methods of distributing it. Finally, I outlined strategies to build and refine your curational engine.

I realize that some of the suggestions here may seem extreme, but I like to see them as the information management equivalent of the mostly meat ‘carnivory’ diet. In a world of abundant harmful food and propaganda by big food, the carnivory diet generates superior health outcomes through strong discipline and curation.

I hope this article will spark further discussion on information management in crypto and result in more people open sourcing their strategies to the community. I’ve opened a repo on GitHub with the format for the Google Sheet and the list of tools. Do open pull requests with suggestions or improvements.

Basic Curational Format in CSV

Recommended Reading

Venkatesh Rao has written about the optimal amount of reliance on the internet and the best method of connecting with it. I highly recommend reading through his arguments in Against Waldenponding and Against Waldenponding 2.